Shrink LVM with Software RAID

Introduction

This guide shows how to shrink Linux software RAID devices that are being used by Linux’s Logical Volume Manager (LVM).

In the scenario described below we have a CentOS 7.5 server with two identical hard disks, /dev/sda and /dev/sdb, each 20GB in size.

Linux software RAID has been used to create three disk devices from these two hard disks using RAID level 1 (mirroring).

The table below describes the file system layout:

| RAID | Size | Type | Devices | Mount Point |
|---|---|---|---|---|
| md125 | 18GB | lvm | /dev/sda3, /dev/sdb3 | / |
| md126 | 465MB | ext4 | /dev/sda2, /dev/sdb2 | /boot |
| md127 | 2GB | swap | /dev/sda1, /dev/sdb1 | swap |

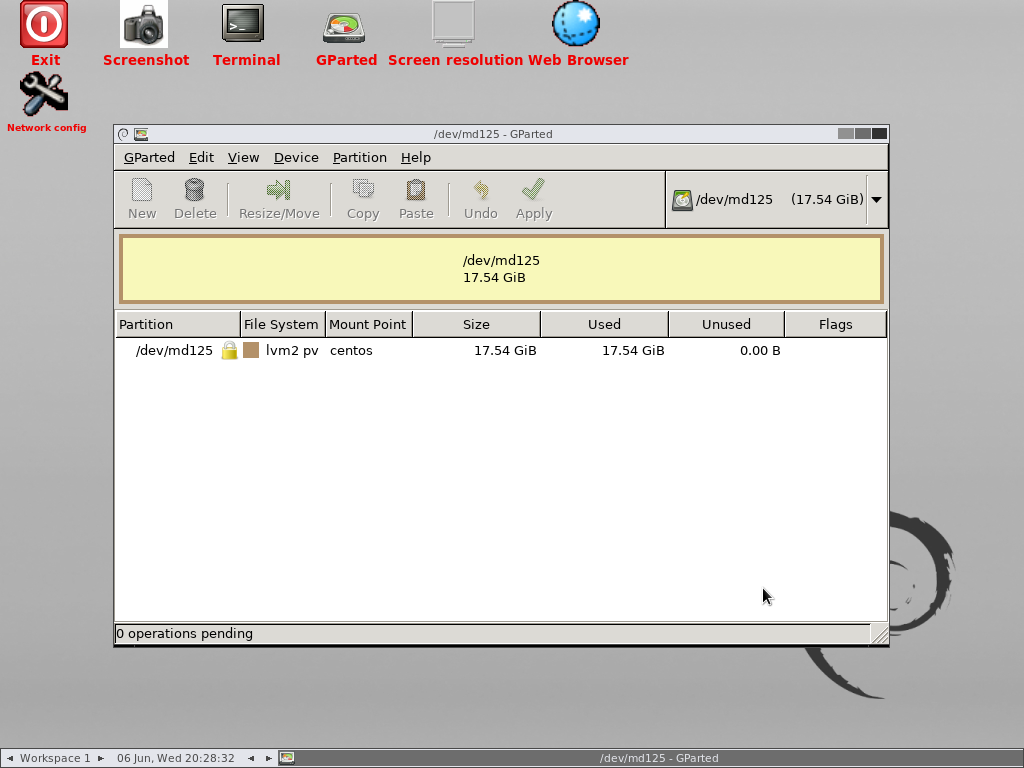

The volume group is called centos. It contains one physical volume, md125, and one logical volume called root, which is formatted as ext4 and holds the CentOS root file system.

We will shrink the root logical volume, together with the physical volume and RAID array beneath it, to free space that can be used to make /boot bigger.

The final layout will therefore be:

| RAID | Size | Type | Devices | Mount Point |
|---|---|---|---|---|
| md125 | 17GB | lvm | /dev/sda3, /dev/sdb3 | / |
| md126 | 1GB | ext4 | /dev/sda1, /dev/sdb1 | /boot |
| md127 | 2GB | swap | /dev/sda2, /dev/sdb2 | swap |

Method

The operations to be performed are as follows:

- Boot the system using gparted live CD.

- Shrink the root file system.

- Shrink the root logical volume.

- Shrink the physical volume.

- Shrink the RAID device /dev/md125.

- Fail and remove /dev/sda from all RAID arrays.

- Delete all partitions from /dev/sda.

- Recreate RAID partitions on /dev/sda with adjusted sizes.

- Add /dev/sda back to all RAID arrays.

- Wait for arrays to synchronise.

- Fail and remove /dev/sdb from all RAID arrays.

- Delete all partitions from /dev/sdb.

- Recreate RAID partitions on /dev/sdb with adjusted sizes.

- Add /dev/sdb back to all RAID arrays.

- Wait for arrays to synchronise.

- Grow resized file systems and devices to use the new space.

- Reboot the system back into CentOS.

Boot the system using gparted live media

To perform the tasks described below, we will be using the gparted live CD. You can download the gparted live CD ISO and burn it to a CD/DVD, or download the gparted live USB.

Once you have created the gparted live media, reboot the server from the gparted live media.

Answer the questions using the defaults unless you want to change the language used.

Once booted, load the terminal application and switch user to root:

sudo su -

Free up some space

Check the root file system:

root@debian:~# e2fsck -f /dev/centos/root

e2fsck 1.44.0 (7-Mar-2018)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/centos/root: 26004/979200 files (0.1% non-contiguous), 347482/3932160 blocks
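Before choosing a shrink target, it can help to confirm how much space is in use. The following is a minimal sketch, not part of the original procedure: the helper name `parse_e2fsck_blocks` is hypothetical, and it assumes 4 KiB file system blocks and a 14 GiB target (the value this guide passes to resize2fs).

```shell
#!/bin/sh
# Hypothetical helper: pull "used total" block counts out of the
# final summary line printed by e2fsck.
parse_e2fsck_blocks() {
    printf '%s\n' "$1" |
        sed -n 's|.* \([0-9][0-9]*\)/\([0-9][0-9]*\) blocks$|\1 \2|p'
}

summary='/dev/centos/root: 26004/979200 files (0.1% non-contiguous), 347482/3932160 blocks'

# Split the two numbers into $1 (used) and $2 (total).
set -- $(parse_e2fsck_blocks "$summary")

# Assumption: 4 KiB blocks, so used KiB = used blocks * 4.
used_kib=$(( $1 * 4 ))
target_kib=$(( 14 * 1024 * 1024 ))

if [ "$used_kib" -lt "$target_kib" ]; then
    echo "used ${used_kib} KiB fits in target ${target_kib} KiB"
else
    echo "target is too small for the data in use" >&2
fi
```

With the summary line shown above, roughly 1.3 GiB is in use, so a 14 GiB target leaves plenty of headroom.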

Shrink the root file system:

root@debian:~# resize2fs /dev/centos/root 14G

resize2fs 1.44.0 (7-Mar-2018)

Resizing the filesystem on /dev/centos/root to 3670016 (4k) blocks.

The filesystem on /dev/centos/root is now 3670016 (4k) blocks long.

Next, shrink the logical volume. Note: we leave the logical volume slightly larger than the file system (15G versus 14G) to ensure no data is lost:

root@debian:~# lvreduce -L 15G /dev/centos/root

WARNING: Reducing active logical volume to 15.00 GiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce centos/root? [y/n]: y

Size of logical volume centos/root changed from <17.54 GiB (4490 extents) to 15.00 GiB (3840 extents).

Logical volume centos/root successfully resized.

Then we shrink the physical volume, again leaving it larger than the logical volume (16G versus 15G) to ensure no data is lost:

root@debian:~# pvresize --setphysicalvolumesize 16G /dev/md125

/dev/md125: Requested size 16.00 GiB is less than real size 17.54 GiB. Proceed? [y/n]: y

WARNING: /dev/md125: Pretending size is 33554432 not 36790272 sectors.

Physical volume "/dev/md125" changed

1 physical volume(s) resized / 0 physical volume(s) not resized
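The three shrink steps above only stay safe if each layer remains at least as large as the layer it contains. A minimal sketch of that check follows; the function name `check_shrink_order` is hypothetical and the sizes are whole GiB values taken from the commands above.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only when
# file system <= logical volume <= physical volume (all in whole GiB).
check_shrink_order() {
    fs_gib="$1"
    lv_gib="$2"
    pv_gib="$3"
    [ "$fs_gib" -le "$lv_gib" ] && [ "$lv_gib" -le "$pv_gib" ]
}

# Sizes used in this guide: resize2fs 14G, lvreduce 15G, pvresize 16G.
if check_shrink_order 14 15 16; then
    echo "sizes nest correctly"
else
    echo "sizes do not nest; do not proceed" >&2
fi
```

Leaving a gap between layers costs nothing here, because every layer is grown back to its maximum at the end of the procedure.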

Now that the physical volume has been reduced in size, the RAID array needs to be shrunk as well. The new size must be specified in kibibytes (KiB) and must be divisible by 64.

echo "16 * 1024 * 1024" | bc

This gives the result 16777216, which we can use to shrink the RAID array:

root@debian:~# mdadm --grow /dev/md125 --size=16777216

mdadm: component size of /dev/md125 has been set to 16777216K
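The size arithmetic above can also be done with plain shell arithmetic. This is a small sketch with hypothetical helper names (`gib_to_kib`, `is_multiple_of_64`); it only prints the mdadm command rather than running it.

```shell
#!/bin/sh
# Convert whole GiB to the KiB figure passed to mdadm --size.
gib_to_kib() {
    echo $(( $1 * 1024 * 1024 ))
}

# Check the divisible-by-64 requirement stated in this guide.
is_multiple_of_64() {
    [ $(( $1 % 64 )) -eq 0 ]
}

size_kib=$(gib_to_kib 16)
if is_multiple_of_64 "$size_kib"; then
    # Dry run: show the command instead of executing it.
    echo "mdadm --grow /dev/md125 --size=$size_kib"
else
    echo "size $size_kib is not a multiple of 64" >&2
fi
```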

And then grow the physical volume to use any remaining space from the last operation:

root@debian:~# pvresize /dev/md125

Physical volume "/dev/md125" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

Remove the first drive from the RAID arrays

We will now remove all /dev/sda device partitions from all of the RAID arrays so that we can work on the drive in the subsequent section.

root@debian:~# mdadm --manage /dev/md125 --fail /dev/sda3

mdadm: set /dev/sda3 faulty in /dev/md125

root@debian:~# mdadm --manage /dev/md125 --remove /dev/sda3

mdadm: hot removed /dev/sda3 from /dev/md125

root@debian:~# mdadm --manage /dev/md126 --fail /dev/sda2

mdadm: set /dev/sda2 faulty in /dev/md126

root@debian:~# mdadm --manage /dev/md126 --remove /dev/sda2

mdadm: hot removed /dev/sda2 from /dev/md126

root@debian:~# mdadm --manage /dev/md127 --fail /dev/sda1

mdadm: set /dev/sda1 faulty in /dev/md127

root@debian:~# mdadm --manage /dev/md127 --remove /dev/sda1

mdadm: hot removed /dev/sda1 from /dev/md127
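Because the same fail and remove pair is repeated for every array, the commands can be generated from a list of array and partition pairs. The sketch below is a dry run that only prints the commands; the function name `print_fail_remove` and the `array:partition` argument format are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the fail/remove commands
# for each "array:partition" pair given as an argument.
print_fail_remove() {
    for pair in "$@"; do
        array="${pair%%:*}"   # text before the colon, e.g. md125
        part="${pair#*:}"     # text after the colon, e.g. sda3
        echo "mdadm --manage /dev/$array --fail /dev/$part"
        echo "mdadm --manage /dev/$array --remove /dev/$part"
    done
}

# The pairs used above for the first drive.
print_fail_remove md125:sda3 md126:sda2 md127:sda1
```

Reviewing the printed commands before running them helps catch a wrong array and partition pairing, which is easy to make when the partition numbers do not match the array order.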

Re-create partitions on first drive

Delete the existing partitions on /dev/sda using parted:

root@debian:~# parted /dev/sda

GNU Parted 3.2

Using /dev/sda

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) rm 1

(parted) rm 2

(parted) rm 3

(parted) quit

Information: You may need to update /etc/fstab.

Now create the new partitions using fdisk in the following order:

- /boot

- swap

- /

root@debian:~# fdisk /dev/sda

Welcome to fdisk (util-linux 2.31.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-41943039, default 2048): 2048

Last sector, +sectors or +size{K,M,G,T,P} (2048-41943039, default 41943039): +1G

Created a new partition 1 of type 'Linux' and of size 1 GiB.

Partition #1 contains a linux_raid_member signature.

Do you want to remove the signature? [Y]es/[N]o: y

The signature will be removed by a write command.

Command (m for help): a

Selected partition 1

The bootable flag on partition 1 is enabled now.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): print

Disk /dev/sda: 20 GiB, 21474836480 bytes, 41943040 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x000a3d32

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 2099199 2097152 1G fd Linux raid autodetect

Filesystem/RAID signature on partition 1 will be wiped.

Command (m for help): n

Partition type

p primary (1 primary, 0 extended, 3 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (2-4, default 2):

First sector (2099200-41943039, default 2099200):

Last sector, +sectors or +size{K,M,G,T,P} (2099200-41943039, default 41943039): +2G

Created a new partition 2 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Partition number (1,2, default 2): 2

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sda: 20 GiB, 21474836480 bytes, 41943040 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x000a3d32

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 2099199 2097152 1G fd Linux raid autodetect

/dev/sda2 2099200 6293503 4194304 2G fd Linux raid autodetect

Filesystem/RAID signature on partition 1 will be wiped.

Command (m for help): n

Partition type

p primary (2 primary, 0 extended, 2 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (3,4, default 3): 3

First sector (6293504-41943039, default 6293504):

Last sector, +sectors or +size{K,M,G,T,P} (6293504-41943039, default 41943039):

Created a new partition 3 of type 'Linux' and of size 17 GiB.

Command (m for help): t

Partition number (1-3, default 3): 3

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.
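The sector numbers fdisk reports above follow directly from the requested sizes. As a quick cross-check, here is a sketch that assumes 512-byte logical sectors (as shown in the fdisk output); the function name `last_sector` is hypothetical.

```shell
#!/bin/sh
# Last sector of a partition that starts at sector $1 and spans $2 MiB,
# assuming 512-byte logical sectors.
last_sector() {
    start="$1"
    size_mib="$2"
    echo $(( start + size_mib * 1024 * 1024 / 512 - 1 ))
}

last_sector 2048 1024      # /boot, +1G: prints 2099199
last_sector 2099200 2048   # swap,  +2G: prints 6293503
```

Both results match the End column in the fdisk listing, and the next partition starts one sector after each of them.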

The drive’s partitions are now ready to be added back into the RAID arrays:

root@debian:~# mdadm --manage /dev/md125 --add /dev/sda3

mdadm: added /dev/sda3

root@debian:~# mdadm --manage /dev/md126 --add /dev/sda1

mdadm: added /dev/sda1

root@debian:~# mdadm --manage /dev/md127 --add /dev/sda2

mdadm: added /dev/sda2

Check the progress like so:

root@debian:~# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md125 : active raid1 sda3[2] sdb3[1]

16777216 blocks super 1.2 [2/1] [_U]

[=>...................] recovery = 5.9% (994432/16777216) finish=16.6min speed=15798K/sec

bitmap: 1/1 pages [4KB], 65536KB chunk

md126 : active raid1 sda1[2] sdb2[1]

499712 blocks super 1.2 [2/1] [_U]

resync=DELAYED

bitmap: 0/1 pages [0KB], 65536KB chunk

md127 : active raid1 sda2[2] sdb1[1]

2055168 blocks super 1.2 [2/1] [_U]

resync=DELAYED

Warning

Wait for all arrays to finish synchronising before proceeding (see below):

root@debian:~# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md125 : active raid1 sda3[2] sdb3[1]

16777216 blocks super 1.2 [2/2] [UU]

bitmap: 0/1 pages [0KB], 65536KB chunk

md126 : active raid1 sda1[2] sdb2[1]

499712 blocks super 1.2 [2/2] [UU]

bitmap: 0/1 pages [0KB], 65536KB chunk

md127 : active raid1 sda2[2] sdb1[1]

2055168 blocks super 1.2 [2/2] [UU]
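Rather than re-running cat by hand, the synchronisation check can be scripted. The sketch below is one possible approach, not the only one: the function name `mdstat_in_sync` is hypothetical, and it assumes that a degraded or rebuilding array shows an underscore in its [UU]-style status field or a recovery/resync line, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper: return 0 when every array listed in the given
# mdstat file is fully in sync, 1 when any array is degraded or
# rebuilding, and 2 when the file cannot be read.
mdstat_in_sync() {
    file="${1:-/proc/mdstat}"
    [ -r "$file" ] || return 2
    if grep -Eq '\[[U_]*_[U_]*\]|recovery|resync' "$file"; then
        return 1
    fi
    return 0
}

# Demonstration against a saved sample rather than the live system.
sample=$(mktemp)
cat > "$sample" <<'EOF'
md125 : active raid1 sda3[2] sdb3[1]
      16777216 blocks super 1.2 [2/1] [_U]
      [=>...................]  recovery =  5.9% (994432/16777216) finish=16.6min speed=15798K/sec
EOF

if mdstat_in_sync "$sample"; then
    echo "in sync"
else
    echo "still rebuilding"
fi
rm -f "$sample"
```

On the live system the function could be polled, for example `until mdstat_in_sync; do sleep 30; done`, before moving on to the second drive.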

Remove the second drive from the RAID arrays

We will now remove all /dev/sdb device partitions from all of the RAID arrays so that we can work on the drive in the subsequent section.

root@debian:~# mdadm --manage /dev/md125 --fail /dev/sdb3

mdadm: set /dev/sdb3 faulty in /dev/md125

root@debian:~# mdadm --manage /dev/md125 --remove /dev/sdb3

mdadm: hot removed /dev/sdb3 from /dev/md125

root@debian:~# mdadm --manage /dev/md126 --fail /dev/sdb2

mdadm: set /dev/sdb2 faulty in /dev/md126

root@debian:~# mdadm --manage /dev/md126 --remove /dev/sdb2

mdadm: hot removed /dev/sdb2 from /dev/md126

root@debian:~# mdadm --manage /dev/md127 --fail /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md127

root@debian:~# mdadm --manage /dev/md127 --remove /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md127

Re-create partitions on second drive

Now remove the partitions using parted and recreate them using fdisk, following the same steps that were used for /dev/sda above but substituting /dev/sdb.

Once the partitions have been recreated we can add them back to the RAID arrays:

root@debian:~# mdadm --manage /dev/md125 --add /dev/sdb3

mdadm: added /dev/sdb3

root@debian:~# mdadm --manage /dev/md126 --add /dev/sdb1

mdadm: added /dev/sdb1

root@debian:~# mdadm --manage /dev/md127 --add /dev/sdb2

mdadm: added /dev/sdb2

Warning

Wait for all arrays to finish synchronising before proceeding.

Check the progress like so:

cat /proc/mdstat

Grow the file systems

First increase the size of the RAID arrays to use all available space on their disks:

root@debian:~# mdadm --grow /dev/md125 --size=max

mdadm: component size of /dev/md125 has been set to 17807360K

root@debian:~# mdadm --grow /dev/md126 --size=max

mdadm: component size of /dev/md126 has been set to 1047552K

Note

There is no need to grow /dev/md127 (swap) as this has not changed in size.

Secondly, grow the file systems on these devices to use all available space, starting with /boot:

root@debian:~# e2fsck -f /dev/md126

e2fsck 1.44.0 (7-Mar-2018)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/md126: 334/124928 files (0.9% non-contiguous), 125673/499712 blocks

root@debian:~# resize2fs /dev/md126

resize2fs 1.44.0 (7-Mar-2018)

Resizing the filesystem on /dev/md126 to 1047552 (1k) blocks.

The filesystem on /dev/md126 is now 1047552 (1k) blocks long.

Finally /dev/centos/root:

root@debian:~# pvresize /dev/md125

Physical volume "/dev/md125" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

root@debian:~# lvresize -l +100%FREE /dev/centos/root

Size of logical volume centos/root changed from 15.00 GiB (3840 extents) to 16.98 GiB (4347 extents).

Logical volume centos/root successfully resized.

root@debian:~# resize2fs /dev/centos/root

resize2fs 1.44.0 (7-Mar-2018)

Resizing the filesystem on /dev/centos/root to 4451328 (4k) blocks.

The filesystem on /dev/centos/root is now 4451328 (4k) blocks long.
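As a final sanity check, the extent count reported by lvresize and the block count reported by resize2fs should describe the same amount of space. The sketch below assumes 4 MiB physical extents (consistent with 3840 extents being 15.00 GiB above) and 4 KiB file system blocks; the helper names are hypothetical.

```shell
#!/bin/sh
# Assumption: 4 MiB extents, so KiB = extents * 4 * 1024.
extents_to_kib() {
    echo $(( $1 * 4 * 1024 ))
}

# Assumption: 4 KiB file system blocks, so KiB = blocks * 4.
blocks_to_kib() {
    echo $(( $1 * 4 ))
}

lv_kib=$(extents_to_kib 4347)      # from the lvresize output
fs_kib=$(blocks_to_kib 4451328)    # from the resize2fs output

if [ "$lv_kib" -eq "$fs_kib" ]; then
    echo "file system fills the logical volume ($fs_kib KiB)"
else
    echo "file system and logical volume sizes differ" >&2
fi
```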